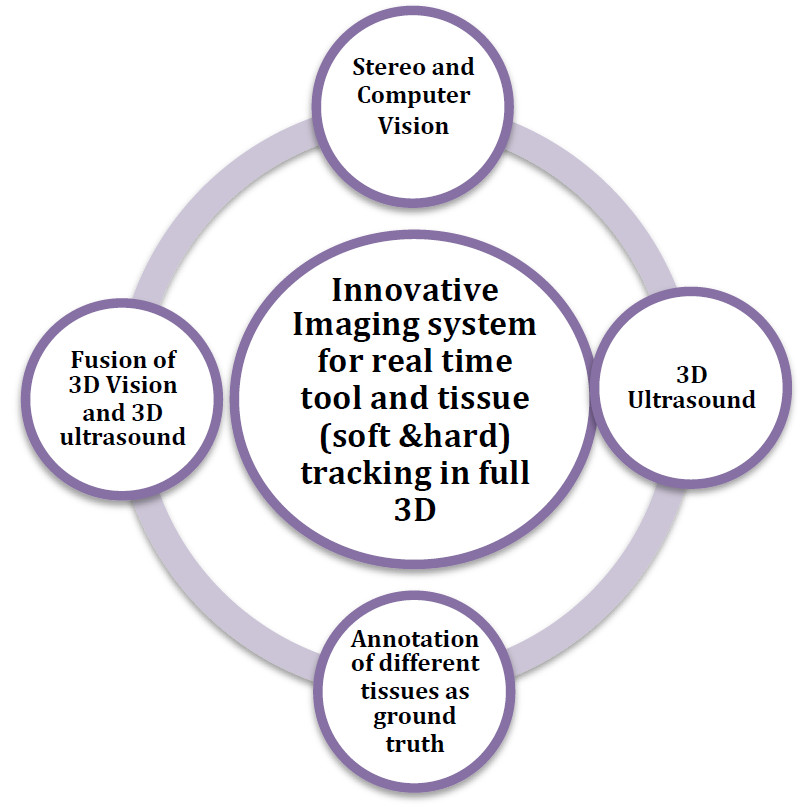

Despite the introduction of minimally invasive techniques to general surgery nearly 30 years ago, many of the more advanced surgical procedures remain difficult to perform and have a steep learning curve. Limited dexterity and reduced perception have curtailed surgeons' ability to achieve results comparable to open surgery. Robot-assisted surgery can drastically enhance a surgeon's capabilities, but to achieve its full potential, real-time tracking of surgical tools is highly desirable for enhanced situational awareness. As tools become more dexterous and articulated, tracking their pose inside the body becomes more complicated. Although kinematic models are available to describe tool motion, they tend to be too inaccurate for precise localization. Projects are available in this area, in collaboration with the Robotics and Autonomous Systems Department at QUT, to investigate how to solve the localization and tracking problem for surgical tools by developing advanced imaging devices and image processing algorithms that fuse 3D vision and ultrasound imaging modalities. Towards this aim, the endoscopic video feed from a set of miniaturised stereo cameras will be processed in real time for 3D labelling of soft and hard (bone) tissue, as well as for identifying the standard visualisation planes of the surgical tools. 3D ultrasound transducers strategically placed outside the surgical area of interest will provide non-invasive external tracking and support a whole range of tissue typing/characterization applications.

If you are interested in this topic and would like further information, please contact me by email (d3.fontanarosa@qut.edu.au) or phone (0403862724).

Check my START database entries: START